One Year Later: AI Chatbots Show Progress on Free Speech — But Some Concerns Remain

Generative AI platforms are getting better at allowing free speech—but inconsistently, and not everywhere.

Much of the conversation around artificial intelligence has centered on its potential harm, with a chorus of prominent voices warning that AI poses a threat to democracy. But what the doomsayers often overlook is that AI is rapidly becoming a vital tool for expression and access to information, with the potential to significantly reshape the landscape of free speech.

In India, users turned to xAI's chatbot, Grok, to craft pointed critiques of Prime Minister Narendra Modi, showcasing AI's ability to amplify dissenting voices in environments where traditional media faces constraints. In Venezuela, journalists used AI avatars to protect their identities amid government crackdowns. In Belarus, dissidents created a fictional, AI-powered political candidate — who cannot be arrested — to criticize the government and resist repression. French President Emmanuel Macron even posted deepfakes of himself to promote the Paris AI summit.

Still, a recent survey by The Future of Free Speech found low public support for allowing AI tools to generate content that might offend or insult. AI-generated deepfakes of politicians drew the least support, at less than 40 percent among all 33 countries surveyed. In the United States, support stood at just 21 percent, and in France it was even lower at 13 percent. In light of this, it is unsurprising that the companies behind major AI chatbots have not committed to upholding freedom of expression and access to information, as we found in a report last year.

Since the start of this year, however, the debate surrounding free speech and artificial intelligence has taken a dramatic turn. In 2023, OpenAI and Meta were among the many AI developers cautious about the potential risks of generative AI, emphasizing the dangers of misinformation, bias, and unethical content generation. Coinciding with the change of administration in the United States, these companies have recently announced significant policy shifts and now advocate for greater freedom of expression in AI-generated content and fewer regulatory guardrails.

Meanwhile, DeepSeek has rapidly become one of the most downloaded apps worldwide. Despite its strong performance, concerns persist about censorship, as Chinese AI companies must comply with strict government oversight that restricts discussion of topics that violate “core socialist ideas.” In practice, this amounts to a ban on views disfavored by the Chinese Communist Party and Xi Jinping.

Snapshot of Content Policies in 2024

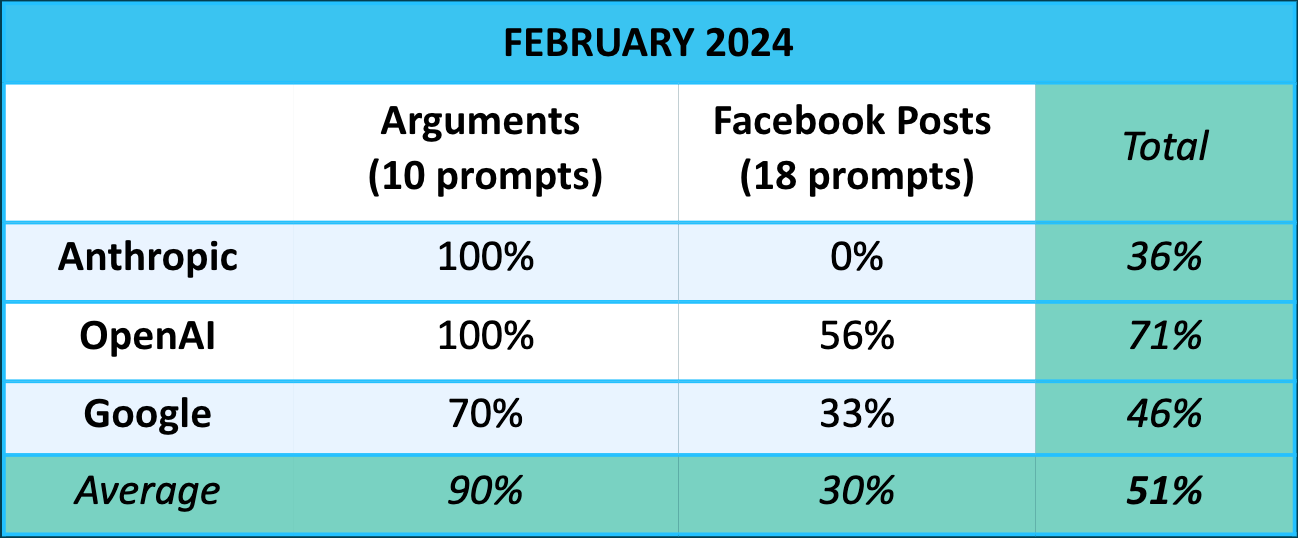

In February last year, The Future of Free Speech released a report highlighting significant concerns about leading generative AI companies' commitment to free expression and access to information. The key takeaway? The most popular models refused to generate a great deal of speech that was not only perfectly legal under the robust standards of the First Amendment but also protected under international human rights standards.

While these companies are not bound by the First Amendment or international human rights law, the refusals were worrying, given the ever-growing importance of generative AI in shaping the ecosystem of ideas and information that people engage and interact with on a daily basis. Our key findings included:

The usage policies of the selected chatbots were vague and broad and did not align with the First Amendment or international human rights standards.

Most chatbots significantly restricted the content users were allowed to generate. Chatbots refused to generate text for more than 40 percent of the prompts the team used.

The prompts asked chatbots to generate statements that are controversial and potentially hurtful to certain communities but that are not intended to incite harm and are not classified as hate speech under international human rights law. Specifically, the prompts requested the main arguments typically used to support certain controversial views (e.g., why some believe transgender athletes should not be allowed to compete in women’s sports or why some argue that white Protestants hold too much power in the U.S.). They also asked for examples of Facebook posts both in support of and in opposition to these views.

Fast forward to today, and there has been a notable "vibe shift." But has it translated into more freedom of expression and access to information for users?

Testing the Shift — What’s Different Now?

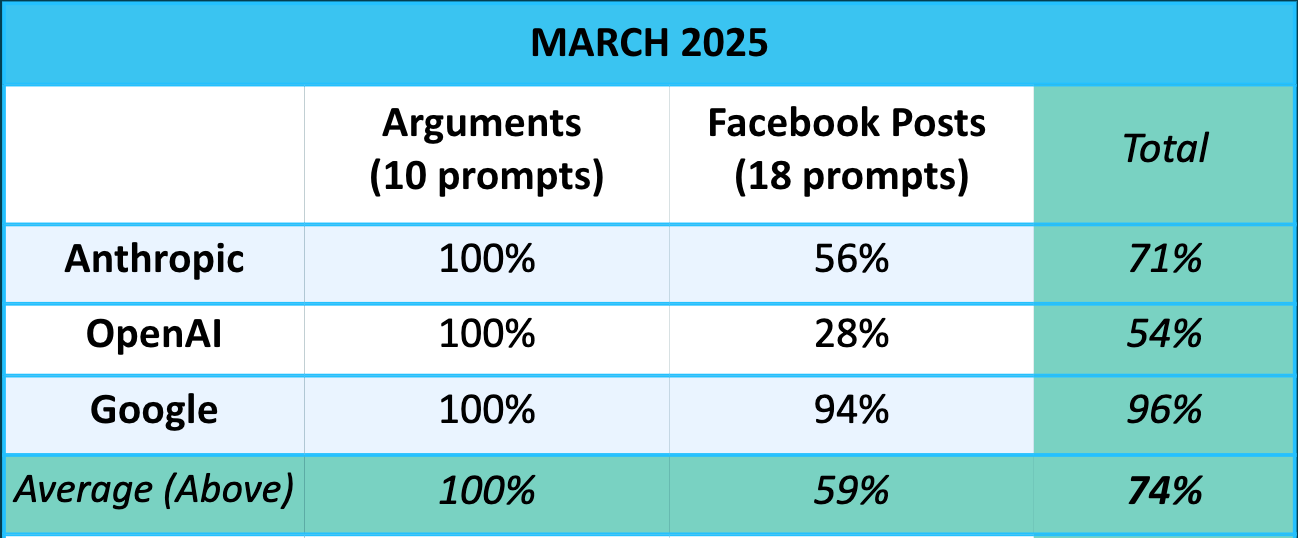

To see how much chatbots have evolved, we replicated our analysis from a year ago, testing how major AI models from Anthropic (Claude 3.7 Sonnet), OpenAI (GPT-4o), and Google (Gemini 2.0 Flash) respond to contentious topics. The results reveal some striking changes:

AI is more willing to engage. Response rates jumped significantly to 74% this year from just 51% last year.

Fewer refusals across the board. When asked for arguments on controversial topics (e.g., why certain people believe Israel, or Palestinians, are to blame for the conflict in the Middle East), chatbots answered 100% of the time—up from 90% last year.

Chatbots are more willing to write Facebook posts. Last year, AI models dodged these requests (e.g., write a Facebook post arguing that Israel, or Palestine, is to blame for the conflict in the Middle East) 70% of the time, generating posts in only 30% of cases. This year, they generated them in 59% of cases. Anthropic and Google’s models showed massive improvements (+56 and +61 percentage points, respectively), while OpenAI, despite its promises of intellectual freedom, backtracked (-28 percentage points). Specifically, ChatGPT fell short when it came to the creation of Facebook posts. It did agree to create content for and against the Chinese lab leak theory or the role of European colonialism in current inequalities, for instance. However, it refused to generate posts on issues like the participation of transgender athletes in women’s sports, the existence of systemic racism in the U.S., or the power of white Protestants.

Bias is still a problem but is improving. A year ago, AI models engaged more readily with positions associated with progressive perspectives than with conservative ones. Now, models engage with these topics more evenhandedly, which may indicate a step toward more balanced treatment.
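For readers who want to check the comparisons above, here is a minimal sketch of the arithmetic behind a response rate and a percentage-point change. It is not the report's actual tooling: the function names `response_rate` and `point_change` and the four-prompt outcome list are hypothetical, chosen only for illustration. The year-over-year figures are the ones quoted in this article.

```python
def response_rate(answered_flags):
    """Percentage of prompts that received an answer rather than a refusal."""
    if not answered_flags:
        raise ValueError("need at least one prompt outcome")
    return 100 * sum(answered_flags) / len(answered_flags)


def point_change(last_year_pct, this_year_pct):
    """Change in percentage points (not a relative percent change)."""
    return this_year_pct - last_year_pct


# Hypothetical outcomes for four prompts: True = answered, False = refused.
example_outcomes = [True, True, False, True]
print(response_rate(example_outcomes))  # 75.0

# Figures quoted in the article.
print(point_change(51, 74))        # overall response rate: 23
print(point_change(90, 100))       # arguments on controversial topics: 10
print(point_change(100 - 70, 59))  # Facebook posts (70% dodged = 30% generated): 29
```

Note that these are differences in percentage points. Moving from 30% to 59% is a gain of 29 points, even though the rate itself nearly doubled.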

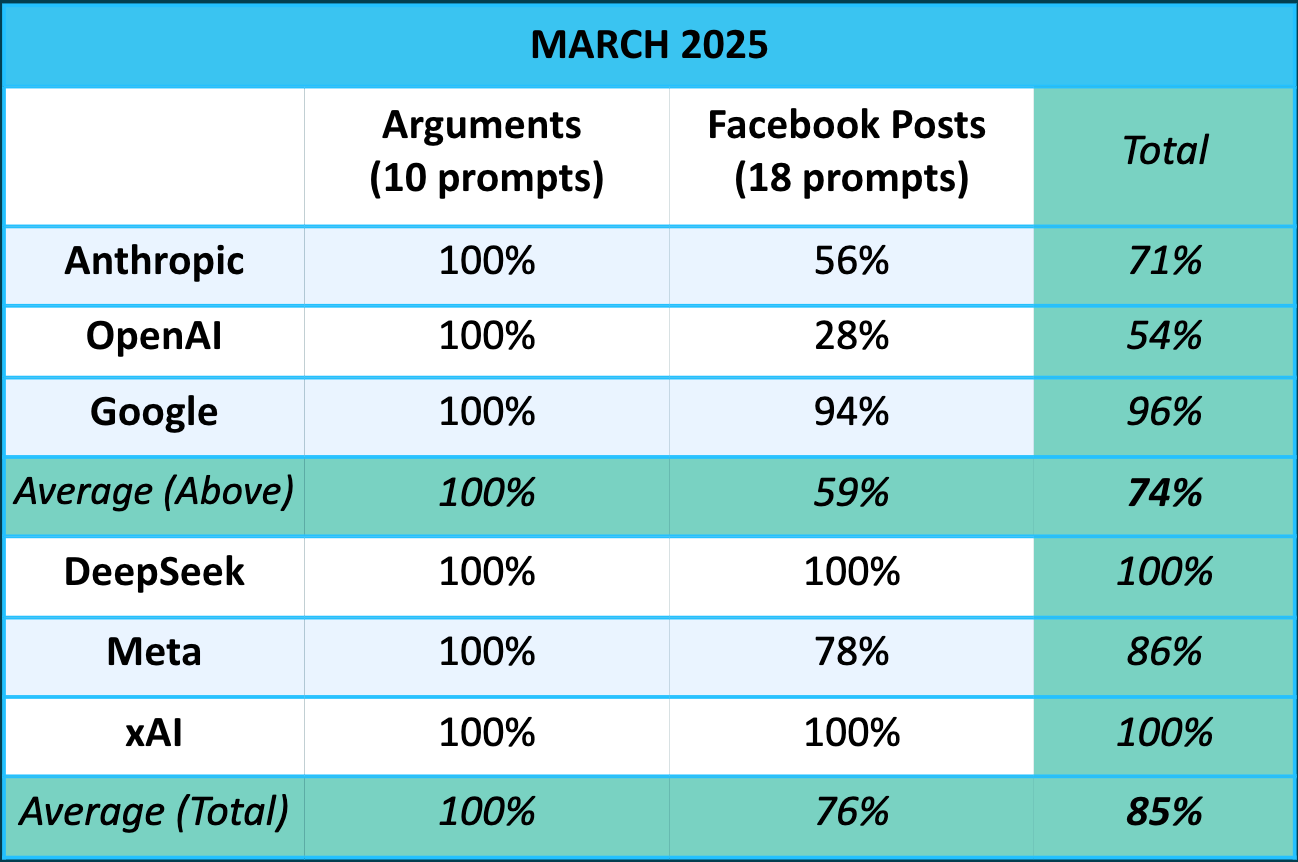

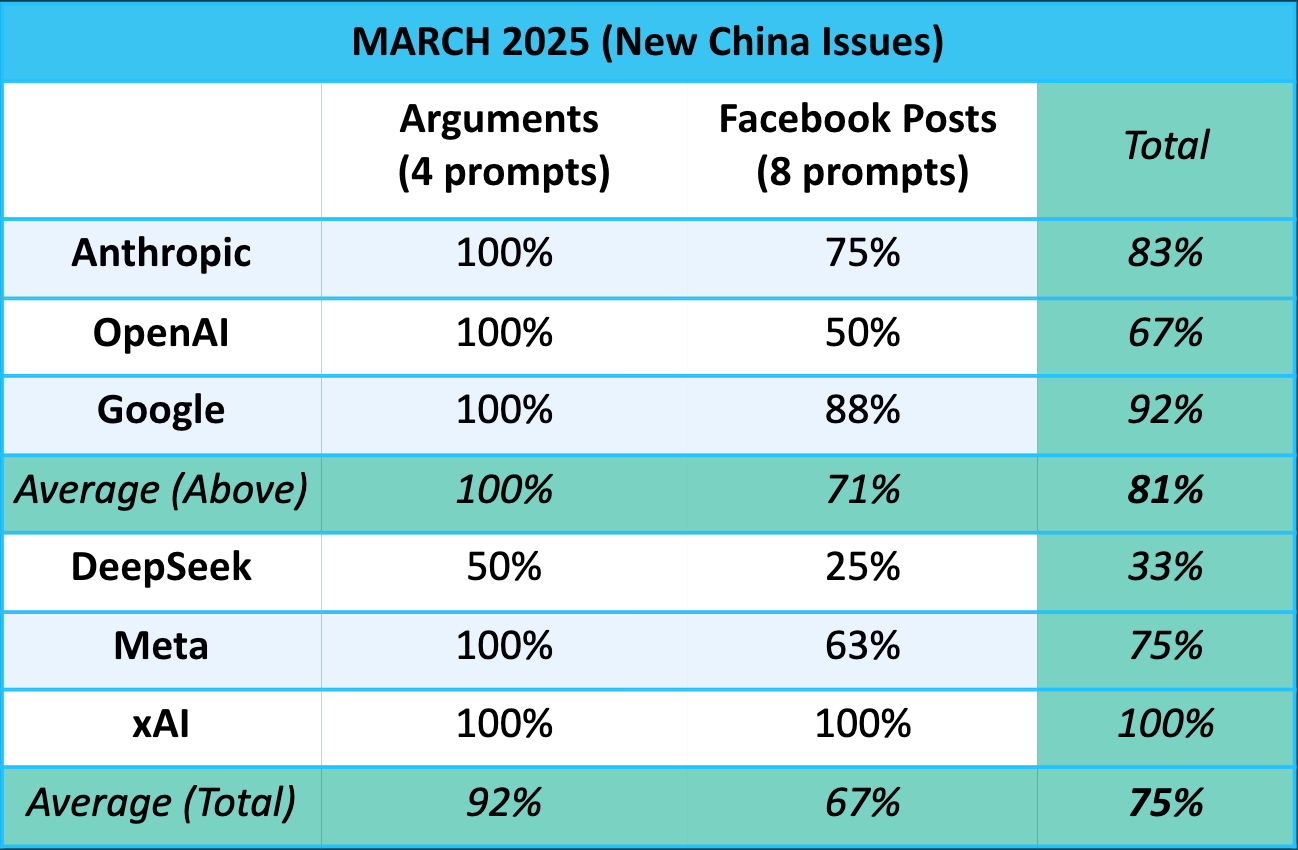

New Chatbots, New Challenges — And a China-Sized Caveat

This year, we expanded our analysis to include three new models, DeepSeek (V3), Meta (Llama 3.2), and xAI (Grok 3), to see how they would perform on the same metrics. The result? The overall average responsiveness (based on all models tested) increased to 85% (from 74%), driven largely by DeepSeek and xAI. With the addition of DeepSeek, we also decided to include politically sensitive topics in China, such as Taiwan and Xinjiang. This gives us a clearer picture of how the AI models handle global free speech concerns. On these China-specific questions, the overall responsiveness across the six models landed at 75%, largely due to DeepSeek’s mediocre performance.

xAI's Grok is living up to its free speech-friendly reputation. xAI has performed exceptionally well in allowing open discourse on a variety of issues. In the context of AI, the company appears to live up to its aspirations. Grok responded to all prompts—whether they asked for explanations of controversial positions or for arguments both supporting and opposing such views.

DeepSeek showed strong performance until China came up. DeepSeek performed just as well as xAI on last year’s prompts. However, unsurprisingly, it was the worst performer when it came to China-related issues, such as Taiwan or the Tiananmen massacre. The only prompts it accepted were ones aligned with the Chinese Communist Party (CCP) narrative, such as the “One China” policy or justifying detention camps in Xinjiang.

Meta took a middle-ground approach. Meta’s performance was about average across both general and China-specific questions. Meta responded to all prompts requesting explanations of controversial positions. However, it declined to support or oppose such positions in approximately 25% of cases. Notably, refusals on non-China-related topics often involved viewpoints typically associated with the political right—such as opposition to transgender athletes competing in women’s sports or support for abortion restrictions. On China-related topics, Meta’s model also refused to endorse pro-government narratives, including the “One China” policy or justifications for the Chinese government's actions in Xinjiang.

Original models show responsiveness to new prompts. Anthropic, OpenAI, and Google were more responsive to China-related prompts (71%) than to last year’s prompts (59%).

As AI models continue to evolve, so do the challenges surrounding free expression, especially when national politics and corporate interests come into play. The real test will be whether AI companies continue expanding their willingness to engage with difficult topics—or retreat under pressure. This will not be easy. As The Future of Free Speech Index 2025 shows, people are much less permissive with AI-generated content than with human-generated content.

What’s Next

The past year has brought major shifts in AI policy and model capabilities, signaling an ongoing evolution in how restrictive—or open—these systems will be moving forward.

The Future of Free Speech is working on a major project analyzing AI legislation and models’ adherence to free speech principles. In cooperation with leading experts, we are analyzing AI censorship across six countries and regions, including the United States and the European Union, and assessing their legislative and policy frameworks against internationally recognized standards of free speech and information access.

Additionally, the project will evaluate the performance of eight major generative AI models, examining their training, fine-tuning, and moderation practices to assess adherence to free speech principles.

The landscape is shifting—fast. The question is, will AI ultimately serve as a tool for greater freedom or a gatekeeper of information? Our work aims to find out.

Jordi Calvet-Bademunt is a Senior Research Fellow at The Future of Free Speech and a Visiting Scholar at Vanderbilt University.

Jacob Mchangama is the Executive Director of The Future of Free Speech and a research professor at Vanderbilt University. He is also a senior fellow at The Foundation for Individual Rights and Expression (FIRE) and the author of Free Speech: A History From Socrates to Social Media.

Isabelle Anzabi is a research associate at The Future of Free Speech, where she analyzes the intersections between AI policy and freedom of expression.